Attempting to enhance video images can be very frustrating. While a video image may have been sampled and digitized at, say, a resolution of 576x768 pixels (the nominal PAL standard size), its underlying resolution is probably no more than about 250x300 pixels. This limit is fixed by the television transmission standard. Video images are also interlaced: one field of the image is displayed in the even-numbered rows and the other field in the odd-numbered rows. The two fields are recorded and refreshed 1/50th of a second apart, so a complete frame is refreshed every 1/25th of a second. If there is motion in the scene, interlacing fields recorded at different instants produces a fringing effect around the boundaries of moving objects. If the video is recorded in a time-lapse mode, say 12-hour mode, the interval between fields is much greater, making this effect worse.

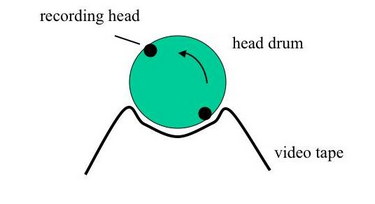
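To make the fringing concrete, here is a minimal Python sketch (an illustration only; the language and every name in it are assumptions, not part of the MATLAB functions used below). It weaves two fields into one frame and moves a bright object's edge between the two captures by speed multiplied by the field interval.

```python
# Minimal sketch of interlacing (weaving) two fields into one frame.
# Assumptions: images are lists of rows of numbers; field A supplies
# 0-based rows 0, 2, 4, ... and field B supplies rows 1, 3, 5, ...

FIELD_INTERVAL_S = 1 / 50  # nominal PAL interval between the two fields


def edge_shift_pixels(speed_px_per_s, field_interval_s=FIELD_INTERVAL_S):
    """How far a moving edge travels between the two field captures.
    Pass a longer interval to model a time-lapse recording mode."""
    return speed_px_per_s * field_interval_s


def weave(field_a, field_b):
    """Interleave the rows of two equal-height fields into a full frame."""
    if len(field_a) != len(field_b):
        raise ValueError("fields must have the same number of rows")
    frame = []
    for row_a, row_b in zip(field_a, field_b):
        frame.append(list(row_a))
        frame.append(list(row_b))
    return frame


def toy_field(rows, cols, edge_col):
    """A toy field: a bright object (1) occupies columns left of edge_col."""
    return [[1 if c < edge_col else 0 for c in range(cols)] for _ in range(rows)]


# An object moving at 100 pixels/s shifts 2 pixels between the fields.
shift = round(edge_shift_pixels(100))
frame = weave(toy_field(3, 6, edge_col=2), toy_field(3, 6, edge_col=2 + shift))

for row in frame:
    print("".join("#" if v else "." for v in row))
```

The printed rows alternate between `##....` and `####..`, which is the comb-like fringe seen around moving objects; with no motion (a shift of 0) the two fields would line up exactly.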

The other thing to note is that the two fields are recorded to video tape by separate heads on opposite sides of the recording drum, so the quality and characteristics of the two recorded fields can be quite different. The magnetic flux generated by the two heads can differ, and different amounts of dirt may have accumulated on each head. Accordingly, you often have to treat the image as two quite separate images even if there is no motion in the scene.
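A quick way to check for this head-to-head difference is to compare simple statistics of the two fields. The Python sketch below is a hypothetical helper, not one of the MATLAB functions in this article: it reports the mean and standard deviation of the even and odd rows and, as one crude correction, applies a linear gain and offset to the odd rows so their statistics match the even rows. Real head differences need not be a pure gain/offset, so treat this as a diagnostic rather than a fix.

```python
import statistics

# Minimal sketch: compare the two fields of a greyscale frame and, optionally,
# give the odd-row field the same mean and spread as the even-row field.
# Assumptions: the frame is a list of rows of grey values; rows are 0-based,
# so "even" means rows 0, 2, 4, ... (hypothetical helper for illustration).


def field_stats(image):
    """Return ((mean, stdev) of even rows, (mean, stdev) of odd rows)."""
    even = [v for row in image[0::2] for v in row]
    odd = [v for row in image[1::2] for v in row]
    return ((statistics.fmean(even), statistics.pstdev(even)),
            (statistics.fmean(odd), statistics.pstdev(odd)))


def equalise_fields(image):
    """Apply a linear gain/offset to the odd rows so their mean and standard
    deviation match those of the even rows."""
    (mean_e, std_e), (mean_o, std_o) = field_stats(image)
    gain = std_e / std_o if std_o > 0 else 1.0
    out = [list(row) for row in image]
    for r in range(1, len(out), 2):
        out[r] = [(v - mean_o) * gain + mean_e for v in out[r]]
    return out


# Toy frame whose odd rows are uniformly 20 grey levels darker.
frame = [[100, 110, 120],
         [80, 90, 100],
         [100, 110, 120],
         [80, 90, 100]]
print(field_stats(frame))
print(equalise_fields(frame))
```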

Deinterlace the image using the following call to extractfields. This function extracts the two fields from the image, one made up of the odd-numbered rows and the other of the even-numbered rows. Here we have requested that the missing rows be filled in by interpolating between the rows above and below.

```matlab
>> [f1,f2] = extractfields(im,'interp');
```
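For readers without the MATLAB function to hand, the following Python sketch shows one plausible way to do the same thing on a greyscale frame. It is not the extractfields source: which rows count as field 1 and how the top and bottom rows are handled are assumptions, noted in the comments. With `interp=False` it returns the half-height fields used later for denoising.

```python
# Minimal sketch of a field-extraction step on a greyscale frame.
# Assumptions (not taken from extractfields itself): field 1 is the
# odd-numbered rows in MATLAB's 1-based numbering (0-based rows 0, 2, 4, ...),
# field 2 is the even-numbered rows, and a missing row with no neighbour on
# one side is copied from the neighbour it does have.


def extract_fields(image, interp=False):
    """Return (field1, field2).

    interp=False: two half-height images.
    interp=True: two full-height images, each missing row filled with the
    average of the rows above and below it."""
    if not interp:
        return [list(r) for r in image[0::2]], [list(r) for r in image[1::2]]
    return _fill_missing_rows(image, keep=0), _fill_missing_rows(image, keep=1)


def _fill_missing_rows(image, keep):
    """Keep rows whose 0-based index has parity `keep`; interpolate the rest."""
    h = len(image)
    if h < 2:
        raise ValueError("need at least two rows")
    out = []
    for r in range(h):
        if r % 2 == keep:
            out.append([float(v) for v in image[r]])
        elif r == 0:                      # no row above: copy the row below
            out.append([float(v) for v in image[1]])
        elif r == h - 1:                  # no row below: copy the row above
            out.append([float(v) for v in image[h - 2]])
        else:                             # average the rows above and below
            out.append([(a + b) / 2 for a, b in zip(image[r - 1], image[r + 1])])
    return out


frame = [[10, 10], [50, 50], [30, 30], [70, 70]]
f1, f2 = extract_fields(frame, interp=True)
print(f1)  # [[10.0, 10.0], [20.0, 20.0], [30.0, 30.0], [30.0, 30.0]]
print(f2)  # [[50.0, 50.0], [50.0, 50.0], [60.0, 60.0], [70.0, 70.0]]
```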

We now have two images that are clearer than the original and you can see how different the two fields can be. Note also the different positions of the people in the two fields.

*Figure: Field 1 (left) and Field 2 (right).*

As you can see above, the chrominance (colour) information can be rather poor. However, the luminance information (grey values) can be OK, so it is often useful to convert colour images to greyscale.

```matlab
>> gf1 = rgb2gray(f1);
>> gf2 = rgb2gray(f2);
```

*Figure: Field 1 (left) and Field 2 (right), converted to greyscale.*
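The greyscale conversion is just a weighted sum of the colour channels. MATLAB documents rgb2gray as computing 0.2989 R + 0.5870 G + 0.1140 B, essentially the ITU-R BT.601 luma weights. The Python sketch below applies that sum per pixel; the function name and the list-of-tuples image layout are assumptions for illustration.

```python
# Minimal sketch of a luminance conversion like rgb2gray, using the weights
# MATLAB documents for rgb2gray. Assumption: the image is a list of rows of
# (R, G, B) tuples of floats.

WEIGHTS = (0.2989, 0.5870, 0.1140)


def rgb_to_gray(image):
    """Convert rows of (R, G, B) pixels to rows of grey values."""
    wr, wg, wb = WEIGHTS
    return [[wr * r + wg * g + wb * b for (r, g, b) in row] for row in image]


# Pure red, green and blue of equal intensity give very different grey levels:
# green contributes most to the luminance.
print(rgb_to_gray([[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]]))
```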

If you want to attempt some noise removal, it is best to deinterlace the images without filling in the missing rows by interpolation; simply extract the half-height images. Interpolated rows would have different noise characteristics from the non-interpolated rows, because each interpolated value is an average of its neighbours above and below. This would interfere with the denoising process.
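The difference in noise characteristics can be worked out directly under a simplifying assumption: each pixel carries independent noise $n$ of variance $\sigma^2$. An interpolated pixel in row $r$ is the average of the pixels above and below it, which belong to the same field:

$$\hat{x}_r = \tfrac{1}{2}\left(x_{r-1} + x_{r+1}\right),$$

so its noise term has variance

$$\operatorname{Var}\left[\tfrac{1}{2}\left(n_{r-1} + n_{r+1}\right)\right] = \tfrac{1}{4}\left(\sigma^2 + \sigma^2\right) = \tfrac{\sigma^2}{2},$$

and covariance $\operatorname{Cov}\left[\tfrac{1}{2}\left(n_{r-1} + n_{r+1}\right),\, n_{r-1}\right] = \tfrac{\sigma^2}{2}$ with each of its neighbours. The interpolated rows therefore carry half the noise variance of the real rows and are correlated with them, so a denoiser that estimates a single noise level for the whole image would be working from a mixture of two different noise statistics.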

Note also that when we convert the fields to greyscale, they are first cast to type double. This ensures the grey values are floating point values rather than rounded integers, preserving as much of the image information as possible. We also divide the cast values by 255 to obtain floating point values between 0 and 1, which is the range MATLAB's image functions assume for data of type double.

```matlab
>> [hf1,hf2] = extractfields(im);      % half-height fields, no interpolation
>> ghf1 = rgb2gray(double(hf1)/255);   % cast to double, scale to [0,1]
>> ghf2 = rgb2gray(double(hf2)/255);
```

*Figure: Half-height Field 1 (left) and Field 2 (right) in greyscale.*
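The benefit of casting to double can be seen on a single pixel. The Python sketch below (hypothetical function names; weights as documented for MATLAB's rgb2gray) computes the same grey value twice: once rounded back to an 8-bit integer, as happens when the conversion is applied to integer data, and once as a float scaled to [0, 1].

```python
# Minimal sketch of why the fields are cast to double and scaled to [0, 1]
# before conversion: with 8-bit integer data the grey value is rounded back
# to an integer, discarding the fractional part.

WEIGHTS = (0.2989, 0.5870, 0.1140)


def gray_uint8(pixel):
    """Grey level from 8-bit (R, G, B), rounded to the nearest integer
    (halves rounded up, as MATLAB does for non-negative values)."""
    r, g, b = pixel
    value = WEIGHTS[0] * r + WEIGHTS[1] * g + WEIGHTS[2] * b
    return int(value + 0.5)


def gray_double(pixel):
    """Grey level from 8-bit (R, G, B) scaled to [0, 1], kept as a float."""
    r, g, b = (c / 255 for c in pixel)
    return WEIGHTS[0] * r + WEIGHTS[1] * g + WEIGHTS[2] * b


pixel = (10, 20, 31)
print(gray_uint8(pixel))         # 18
print(gray_double(pixel) * 255)  # about 18.263: the fraction is preserved
```

Averaged over many pixels these fractions are small, but denoising works precisely on small variations, which is why the article keeps them.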

Try wavelet denoising with the following parameters (see the help information for noisecomp for what they mean):

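```matlab
>> k = 2;          % no. of noise standard deviations to reject
>> nscale = 7;     % no. of filter scales
>> mult = 2.5;     % scaling factor between filter scales
>> norient = 6;    % no. of filter orientations
>> softhard = 1;   % 1 = soft, 0 = hard thresholding
>> nf1 = noisecomp(ghf1, k, nscale, mult, norient, softhard);
>> nf2 = noisecomp(ghf2, k, nscale, mult, norient, softhard);
```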

*Figure: Denoised Field 1 (left) and Denoised Field 2 (right).*
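The softhard flag chooses between two ways of shrinking filter coefficients once a noise threshold has been set. The Python sketch below illustrates only that shrinkage step. It is not noisecomp: it skips the multi-scale, multi-orientation filtering and the noise estimation, and it assumes a threshold of k times a known noise standard deviation purely for illustration. Magnitudes are shrunk while the sign, or the phase for complex values, is kept.

```python
# Illustration of soft versus hard thresholding of filter coefficients.
# Assumptions: the coefficients and the noise standard deviation sigma are
# already known, and the threshold is T = k * sigma.


def shrink(coeffs, sigma, k=2.0, soft=True):
    """Threshold a list of real or complex filter coefficients.

    Coefficients with magnitude <= T are zeroed. Above T, hard thresholding
    keeps them unchanged, while soft thresholding reduces the magnitude by T.
    Either way the sign (or, for complex values, the phase) is preserved."""
    T = k * sigma
    out = []
    for c in coeffs:
        mag = abs(c)
        if mag <= T:
            out.append(0 * c)
        elif soft:
            out.append(c * (mag - T) / mag)
        else:
            out.append(c)
    return out


coeffs = [0.5, -3.0, 5.0, 3 + 4j]
print(shrink(coeffs, sigma=1.0, soft=True))   # small ones zeroed, magnitudes of the rest reduced by 2
print(shrink(coeffs, sigma=1.0, soft=False))  # small ones zeroed, the rest unchanged
```

Soft thresholding avoids the abrupt jump at the threshold that hard thresholding produces, which generally gives smoother-looking results at the cost of slightly reducing genuine features.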

Finally, fill in the missing rows by interpolation and manually adjust the contrast and saturation levels to taste.

```matlab
>> ff1 = interpfields(nf1);
>> ff2 = interpfields(nf2);
```

*Figure: Denoised and contrast-adjusted Field 1 (left) and Field 2 (right).*
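One common way to make the contrast adjustment is a percentile-based stretch, similar in spirit to MATLAB's stretchlim and imadjust, which by default saturate 1% of the data at each end. The Python sketch below is an assumed illustration, not what the article's author used: grey values below the low percentile or above the high percentile are clipped, and the rest are mapped linearly to [0, 1].

```python
# Minimal sketch of a percentile-based contrast stretch. The percentile
# choices and the function name are illustrative assumptions.


def contrast_stretch(image, low_pct=1.0, high_pct=99.0):
    """Map grey values linearly to [0, 1], saturating the extremes."""
    values = sorted(v for row in image for v in row)
    n = len(values)

    def percentile(p):
        # Nearest-rank style lookup into the sorted values.
        idx = min(n - 1, max(0, round(p / 100 * (n - 1))))
        return values[idx]

    lo, hi = percentile(low_pct), percentile(high_pct)
    if hi <= lo:  # flat image: nothing to stretch
        return [[float(v) for v in row] for row in image]
    return [[min(1.0, max(0.0, (v - lo) / (hi - lo))) for v in row] for row in image]


field = [[0.20, 0.30], [0.40, 0.50]]
print(contrast_stretch(field, low_pct=0, high_pct=100))
```

Raising `low_pct` or lowering `high_pct` saturates more pixels and increases the apparent contrast of the remainder, which is the trade-off being tuned "to taste".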

While the final result may be an improvement on the original, it still leaves a lot to be desired. Over the years I have come to the conclusion that surveillance video, even under ideal conditions, is useless for identification purposes. The inherent resolution is just too low.

Indeed, I have done some basic experiments placing a standard optometrist's eye chart in front of some CCD cameras. Typically the camera's 'eyesight' would only be classed as 6/24 to 6/48 vision (20/80 to 20/160 in imperial units). At the 6/48 end, this means that detail someone with normal sight can see at 48 metres would only be visible to the camera from 6 metres away. This is not unexpected: after all, the human eye has about 100 million light-sensitive cells, whereas a CCD camera has fewer than half a million pixels.
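The eye-chart arithmetic can be written out explicitly. A Snellen fraction 6/D (in metres) means the viewer resolves at 6 m what a normal eye resolves at D m; the imperial form uses a 20 ft test distance, so 6/D corresponds to 20/(20D/6). The Python sketch below (function names are illustrative) reproduces the figures quoted above and the pixel count of a 576x768 frame.

```python
# Worked version of the eye-chart arithmetic for Snellen fractions 6/D.


def snellen_imperial_denominator(d_metric, test_distance_m=6):
    """Denominator of the imperial (20 ft) equivalent of 6/d_metric."""
    return 20 * d_metric / test_distance_m


def camera_distance(normal_eye_distance_m, d_metric, test_distance_m=6):
    """Distance at which the camera sees what a normal eye sees at the given distance."""
    return normal_eye_distance_m * test_distance_m / d_metric


for d in (24, 48):
    print(f"6/{d} = 20/{snellen_imperial_denominator(d):.0f}; "
          f"detail a normal eye sees at 48 m needs the camera at {camera_distance(48, d):.0f} m")

# Rough pixel budget: a sampled PAL frame versus ~100 million receptors in the eye.
sampled = 576 * 768
print(sampled, round(100e6 / sampled))
```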

Good Luck!